Project

About project

Bus Factor is a service that automates the monitoring of public transport drivers' behavior using machine learning and computer vision.

We were approached by the Termotech company, which needed to automate and optimize control over public transport drivers. More specifically, it was necessary to automatically understand that:

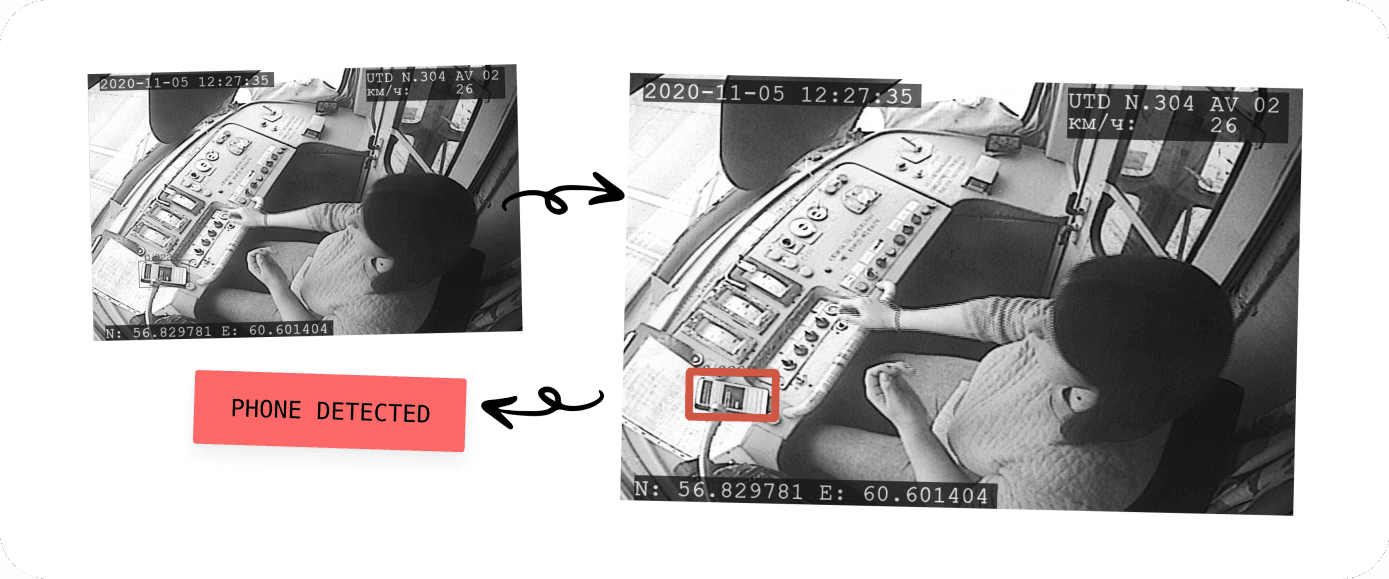

- the public transport driver does not use the phone while driving;

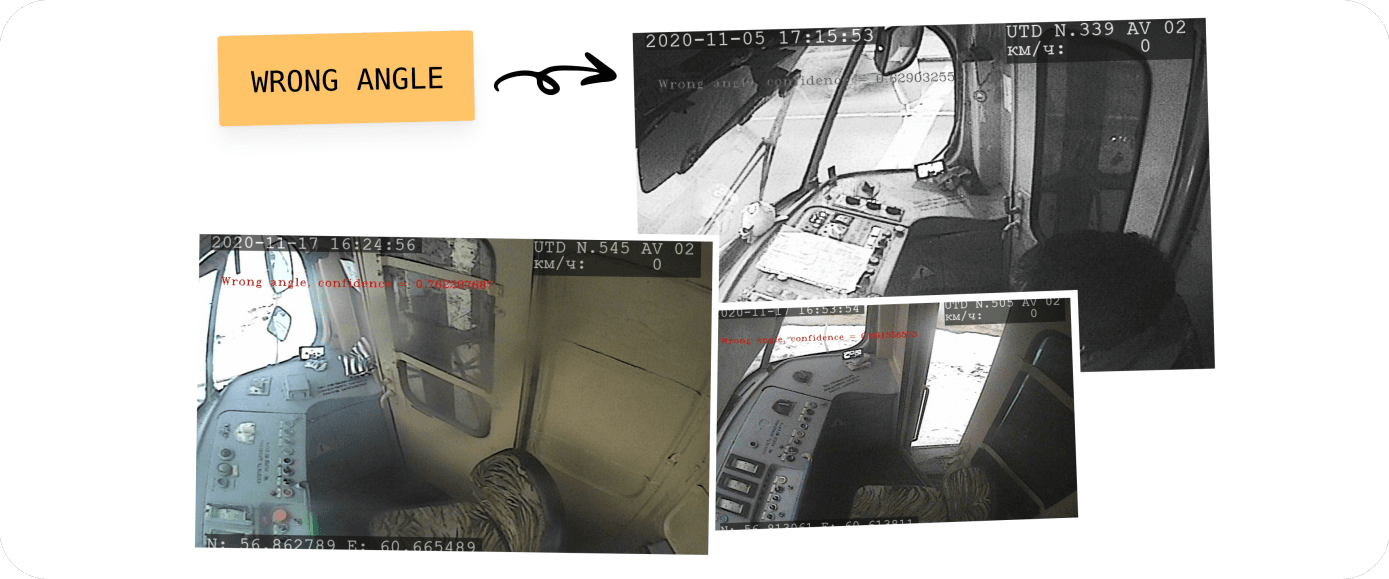

- the camera installed in the driver's cabin shoots from the right angle, is not turned to the side;

- there is a signal from the camera, it is not damaged, not curtained or dirty.

When Termotech contacted us, its employees were solving this task by manually reviewing every recording from the CCTV cameras in the drivers' cabs. This approach took a huge amount of time and was still prone to human error.

How does it work?

- The cameras are installed in the cabs of public transport drivers;

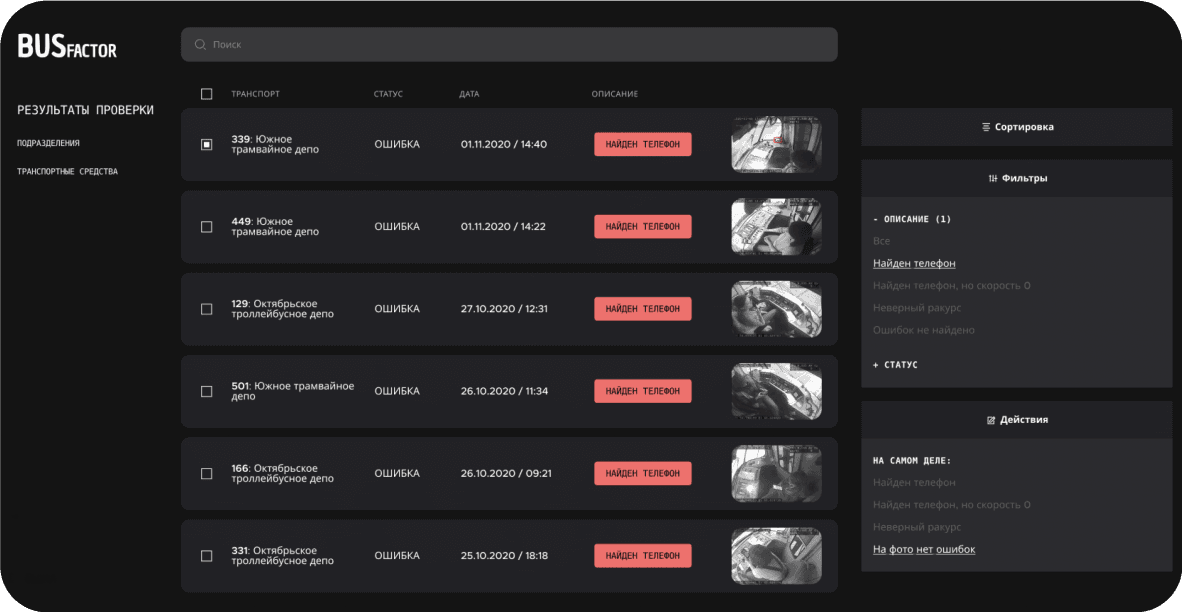

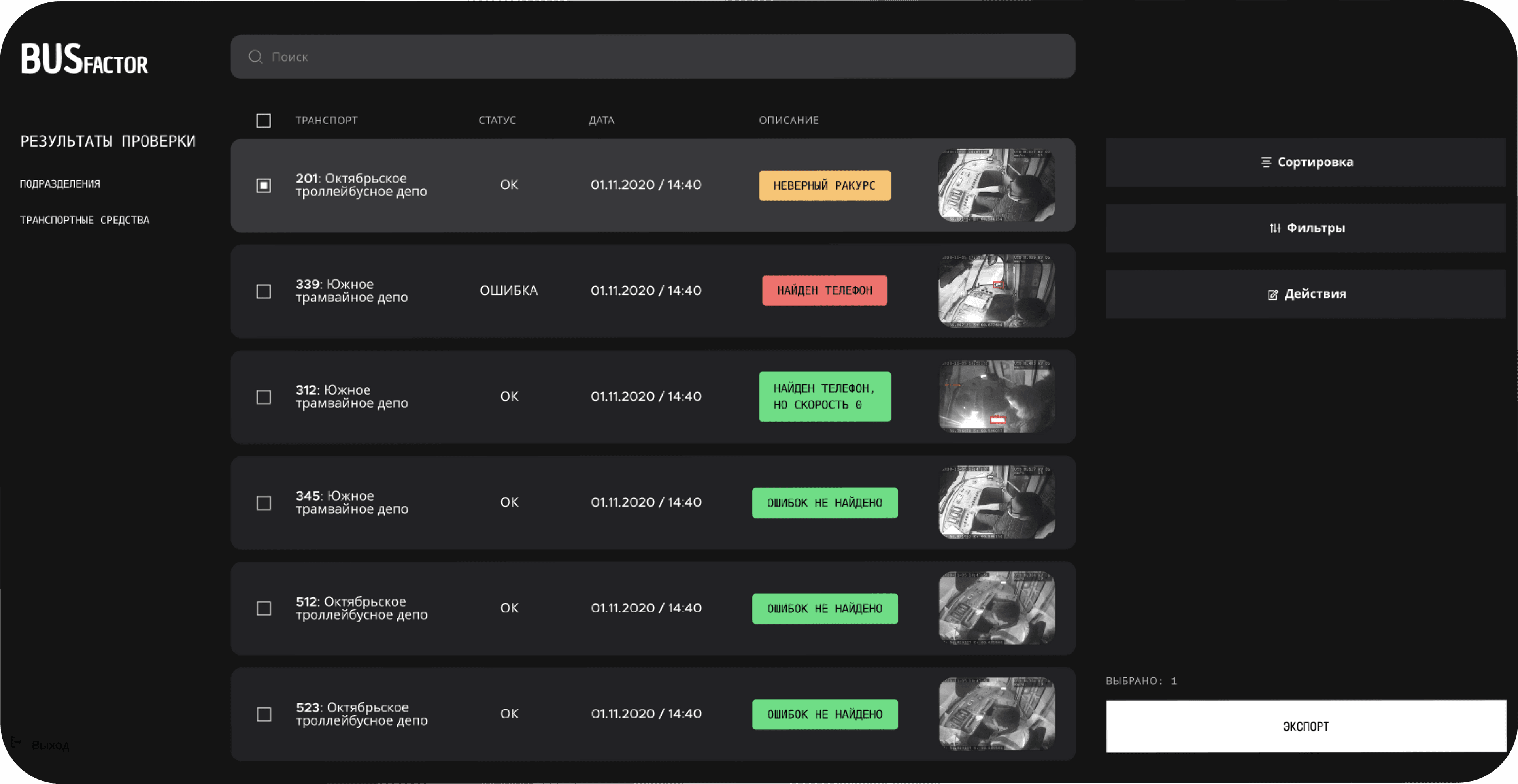

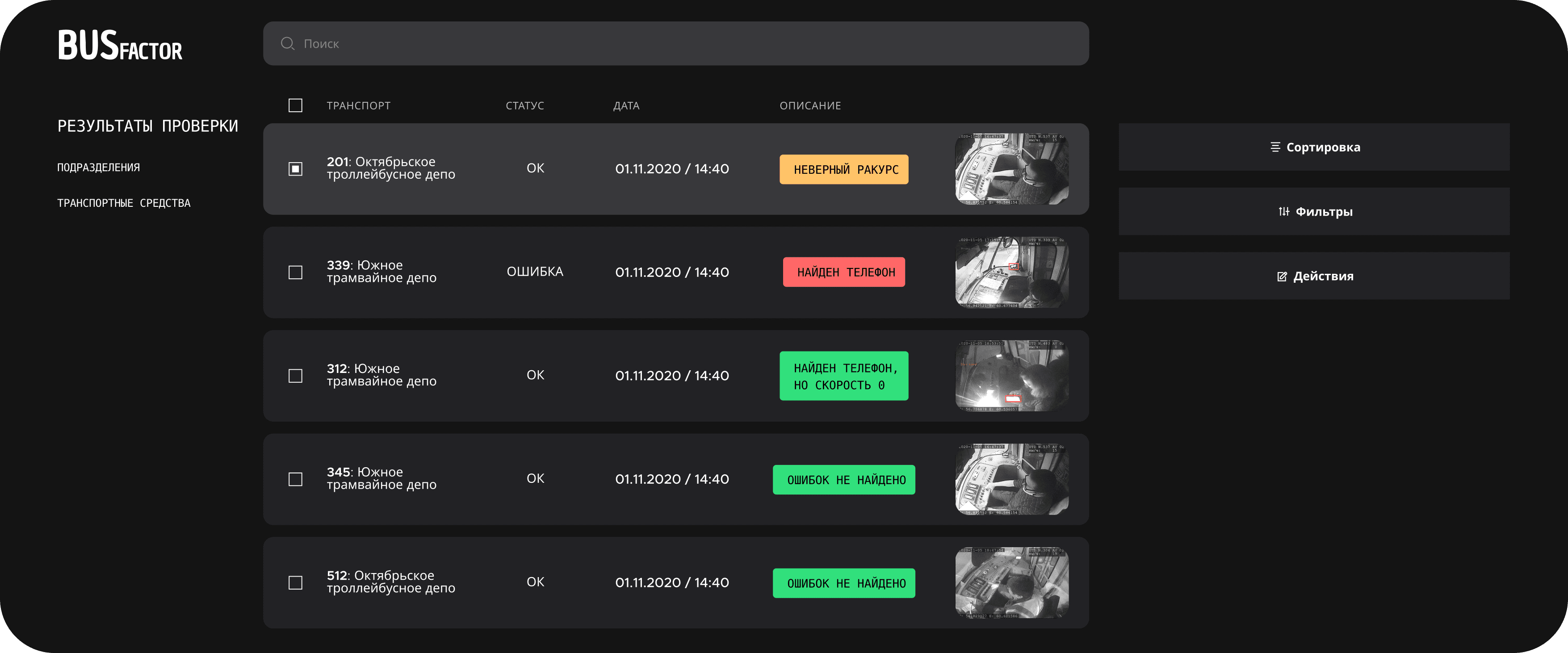

- The Bus Factor system receives photos from the cameras via API, analyzes them for violations, sets each photo with a status corresponding to the detected violations;

- The client's employee sees all the records with vehicles that have violations and takes appropriate actions;

- If an erroneous operation of the system is detected, the client's employee can mark the erroneous status, and subsequently this photo will be used with the correct label when retraining the neural network.
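The workflow above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the status names, the `PhotoRecord` class, the `assign_status` function, and the priority order of the checks are all hypothetical and not taken from the actual Bus Factor code.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    # Hypothetical status names; the real system's labels are not published.
    OK = "ok"
    PHONE = "phone_detected"
    WRONG_ANGLE = "wrong_angle"
    CAMERA_FAULT = "camera_fault"


@dataclass
class PhotoRecord:
    photo_id: str
    predicted: Status
    corrected: Optional[Status] = None  # filled in by an operator on review

    @property
    def training_label(self) -> Status:
        # An operator's correction, when present, overrides the prediction,
        # so the photo enters the next training set with the right label.
        return self.corrected if self.corrected is not None else self.predicted


def assign_status(camera_ok: bool, angle_ok: bool, phone_found: bool) -> Status:
    # Assumed priority: a faulty or misaligned camera makes the phone check
    # unreliable, so those problems are reported first.
    if not camera_ok:
        return Status.CAMERA_FAULT
    if not angle_ok:
        return Status.WRONG_ANGLE
    if phone_found:
        return Status.PHONE
    return Status.OK
```

For example, a photo predicted as `Status.PHONE` that the operator corrects to `Status.OK` would contribute the label `Status.OK` at retraining time.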

Result

We developed a system that integrates with the camera API to fetch a photo, then checks whether there is a phone in the frame, whether the camera angle is correct, and whether the camera has any other problems. After these checks, the system assigns the image the appropriate status.

Afterwards, an operator reviews only the images that the system flagged as violations. The system is tuned to miss almost no violations, at the cost of some false positives.
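One common way to get this "miss almost nothing" behavior is to choose the decision threshold from a target recall on labeled validation data. The sketch below is an assumption about how such tuning could be done, not a description of the actual system; the function name `threshold_for_recall` and the sample scores are hypothetical.

```python
import math
from typing import List


def threshold_for_recall(scores: List[float], labels: List[int],
                         target_recall: float) -> float:
    """Return the highest threshold whose recall is at least target_recall.

    A photo is flagged when its score >= threshold; labels use 1 for
    "violation" and 0 for "no violation".
    """
    positives = sorted((s for s, y in zip(scores, labels) if y == 1),
                       reverse=True)
    if not positives:
        raise ValueError("need at least one positive example")
    # Number of true violations that must be flagged to reach the target.
    needed = max(1, math.ceil(target_recall * len(positives)))
    return positives[needed - 1]


# Hypothetical validation scores: three violations, two clean photos.
scores = [0.9, 0.7, 0.5, 0.35, 0.1]
labels = [1, 0, 1, 1, 0]
strict = threshold_for_recall(scores, labels, target_recall=1.0)
```

With a target recall of 1.0 the threshold drops to the lowest-scoring violation, so the clean photo scored 0.7 is flagged too. That is the trade-off described above: operators see a few extra images, but no violation is skipped.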

Phone detection, the camera-angle check, and the camera-operation check are all implemented with neural networks.

Introducing the system cut the man-hours spent on review by a factor of 30. Operators now only need to double-check some of the system's decisions instead of watching all the recordings themselves.

The client reports that driver discipline has improved significantly since the system was introduced.

ML

BACKEND

Our super team

- Anton, Android

- Fedor, Backend

- Danil, ML

Backend

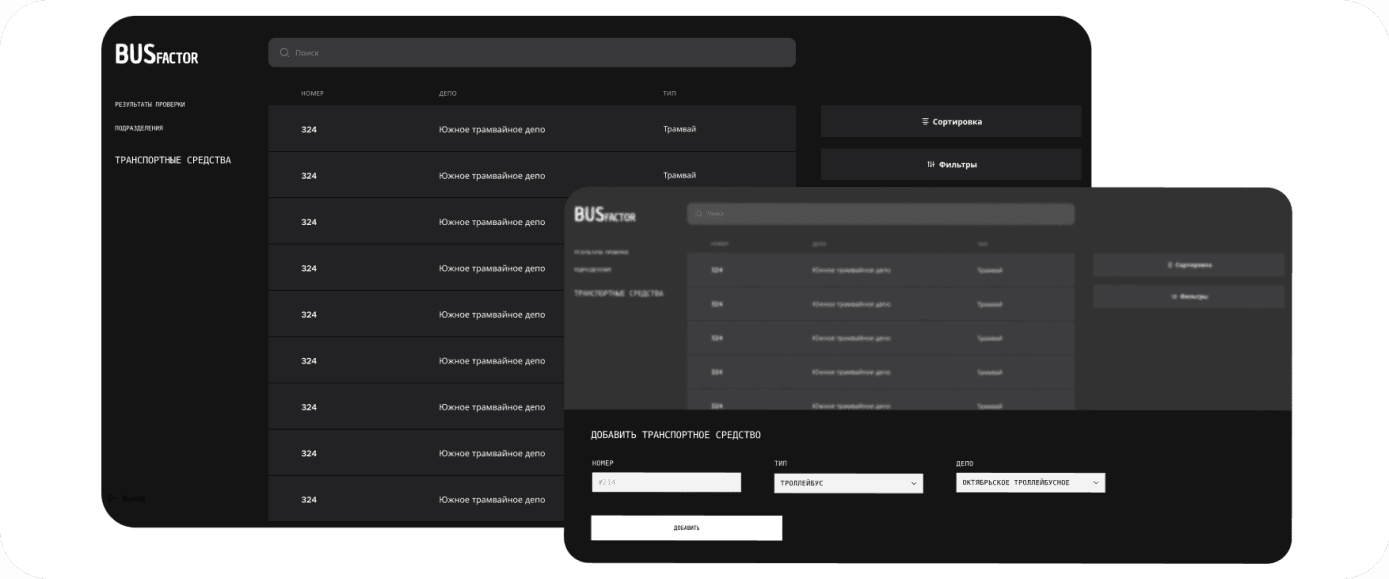

To run the system, we developed a backend that receives photos from public transport vehicles through the video system's API. The photos are analyzed for violations and stored on the server. The administrative panel shows the violations found in each photo. If the detection system gets a photo wrong, the operator can mark it as incorrectly processed and specify the type it actually belongs to. This is how data is collected for further training of the neural networks.

Monitoring was set up to alert the developers whenever errors occur in the system.

The backend was developed in Python with the Django framework.

Machine learning

To train the neural network, we collected and labeled a dataset of 40,000 images together with the client. The images included drivers with and without a phone, photos taken at incorrect angles, and photos with other display errors. Image quality varied: many images were blurry, some cameras were black and white, and many pictures were taken in poor lighting, sometimes at night. The classes were also highly imbalanced: most photos contained neither a phone nor an incorrect angle.
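The text does not say how the class imbalance was handled. One widely used option, shown here purely as an illustration, is to weight each class inversely to its frequency so that rare classes contribute more to the training loss. The function name and the label counts below are hypothetical.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence


def balanced_class_weights(labels: Sequence[Hashable]) -> Dict[Hashable, float]:
    # weight(c) = n_samples / (n_classes * count(c)), the common
    # "balanced" heuristic: rare classes receive larger weights.
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {c: n_samples / (n_classes * k) for c, k in counts.items()}


# Hypothetical toy split: 8 clean photos for every 2 photos with a phone.
weights = balanced_class_weights(["ok"] * 8 + ["phone"] * 2)
```

A dictionary of this kind can be passed to a training loop as per-class loss weights. With the toy split above, the "phone" class is weighted four times as heavily as "ok".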

Our first machine learning approach was to classify photos by the presence of a phone or an incorrect angle. For the phone class, this approach was not accurate enough. Analyzing the network's behavior with heatmaps showed that it struggled to locate the phone and to distinguish it from similar objects that may lie on the driver's panel.

We then reformulated the problem as detection rather than classification: we annotated the bounding boxes of phones in the images and trained the network to detect objects. Having phone annotations also allowed us to use more image augmentations. With class labels alone, a crop could remove the phone from the frame while the photo stayed in the "phone present" class; with bounding boxes, this case is excluded.
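The point about cropping can be made concrete with a short sketch. Assuming boxes are stored as `(x_min, y_min, x_max, y_max)` pixel coordinates, a crop augmentation can recompute which phone boxes survive, so the label always matches the cropped image. The function name `crop_boxes` and the `min_visible` parameter are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # x_min, y_min, x_max, y_max


def crop_boxes(boxes: List[Box], crop: Box, min_visible: float = 0.5) -> List[Box]:
    """Clip boxes to the crop window and shift them into crop coordinates.

    A box is dropped when less than `min_visible` of its area remains,
    so a phone that was cropped out no longer counts as present.
    """
    cx0, cy0, cx1, cy1 = crop
    kept = []
    for x0, y0, x1, y1 in boxes:
        ix0, iy0 = max(x0, cx0), max(y0, cy0)
        ix1, iy1 = min(x1, cx1), min(y1, cy1)
        inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
        area = (x1 - x0) * (y1 - y0)
        if area > 0 and inter / area >= min_visible:
            kept.append((ix0 - cx0, iy0 - cy0, ix1 - cx0, iy1 - cy0))
    return kept


phone = [(10, 10, 30, 30)]
inside = crop_boxes(phone, crop=(0, 0, 50, 50))        # phone fully kept
outside = crop_boxes(phone, crop=(40, 40, 100, 100))   # phone cropped away
```

An empty result means the cropped image is a valid "no phone" example, which a plain classification label could not express.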

Solving the problem as detection made it possible to reach the required accuracy. Detection was implemented with a neural network based on the YOLOv3 architecture.
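Detectors in the YOLO family typically produce many overlapping candidate boxes, which are filtered by confidence and then by non-maximum suppression (NMS). The project's actual post-processing is not described, so the following is a generic sketch of that step; the thresholds and sample detections are assumed values.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # x_min, y_min, x_max, y_max
Detection = Tuple[Box, float]            # (box, confidence score)


def iou(a: Box, b: Box) -> float:
    # Intersection over union of two axis-aligned boxes.
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def nms(dets: List[Detection], score_thr: float = 0.5,
        iou_thr: float = 0.45) -> List[Detection]:
    # Keep confident detections, best first, and drop any box that
    # overlaps an already kept box by more than iou_thr.
    cands = sorted((d for d in dets if d[1] >= score_thr),
                   key=lambda d: d[1], reverse=True)
    kept: List[Detection] = []
    for box, score in cands:
        if all(iou(box, k[0]) <= iou_thr for k in kept):
            kept.append((box, score))
    return kept


dets = [((0, 0, 10, 10), 0.9), ((1, 1, 11, 11), 0.8),
        ((50, 50, 60, 60), 0.7), ((0, 0, 5, 5), 0.2)]
kept = nms(dets)
phone_present = len(kept) > 0
```

In this sample, the second box is suppressed because it overlaps the first, and the fourth is discarded for low confidence. A photo can then be flagged whenever at least one phone box survives.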

The solution was built in Python with the TensorFlow and Keras frameworks, and TensorFlow Serving was used for deployment.
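As an illustration of what a call to a TensorFlow Serving deployment can look like, the sketch below builds a request for its REST predict endpoint (`/v1/models/<name>:predict` with an `instances` payload). The host, the port 8501, the model name `phone_detector`, and the input shape are assumptions for the example; the request is only constructed here, not sent.

```python
import json
from urllib import request


def build_predict_request(host: str, model_name: str, batch: list,
                          port: int = 8501) -> request.Request:
    # TensorFlow Serving's REST API expects a JSON body whose "instances"
    # key holds one input per example (row format).
    url = f"http://{host}:{port}/v1/models/{model_name}:predict"
    body = json.dumps({"instances": batch}).encode("utf-8")
    return request.Request(url, data=body, method="POST",
                           headers={"Content-Type": "application/json"})


# Hypothetical one-pixel RGB "image", just to show the payload shape.
req = build_predict_request("localhost", "phone_detector", [[[[0.0, 0.0, 0.0]]]])
```

Sending it with `urllib.request.urlopen(req)` against a running server would return a JSON object with a `predictions` key. The exact layout of those predictions depends on how the model was exported.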

Review