Project

About project

The annual IT conference Agency Growth Day, dedicated to finding new business models, launching products, and entering new markets, took place in Ekaterinburg on October 24th. Over seven hours, with two short breaks, six speakers each gave a half-hour presentation. A panel discussion followed: representatives of three companies made brief presentations and put their requests to the experts, who asked questions and offered solutions.

When an event runs this long, with many consecutive presentations and discussions, it's not easy for guests to stay engaged throughout. People want to chat with colleagues, take part in activities, make new connections, or simply take a coffee break. Of course, you can watch the recording later, but it's long, and by then everyone has left. What if something important to you comes up in a presentation while you're distracted, something you could have discussed with the speaker right away while they were still available?

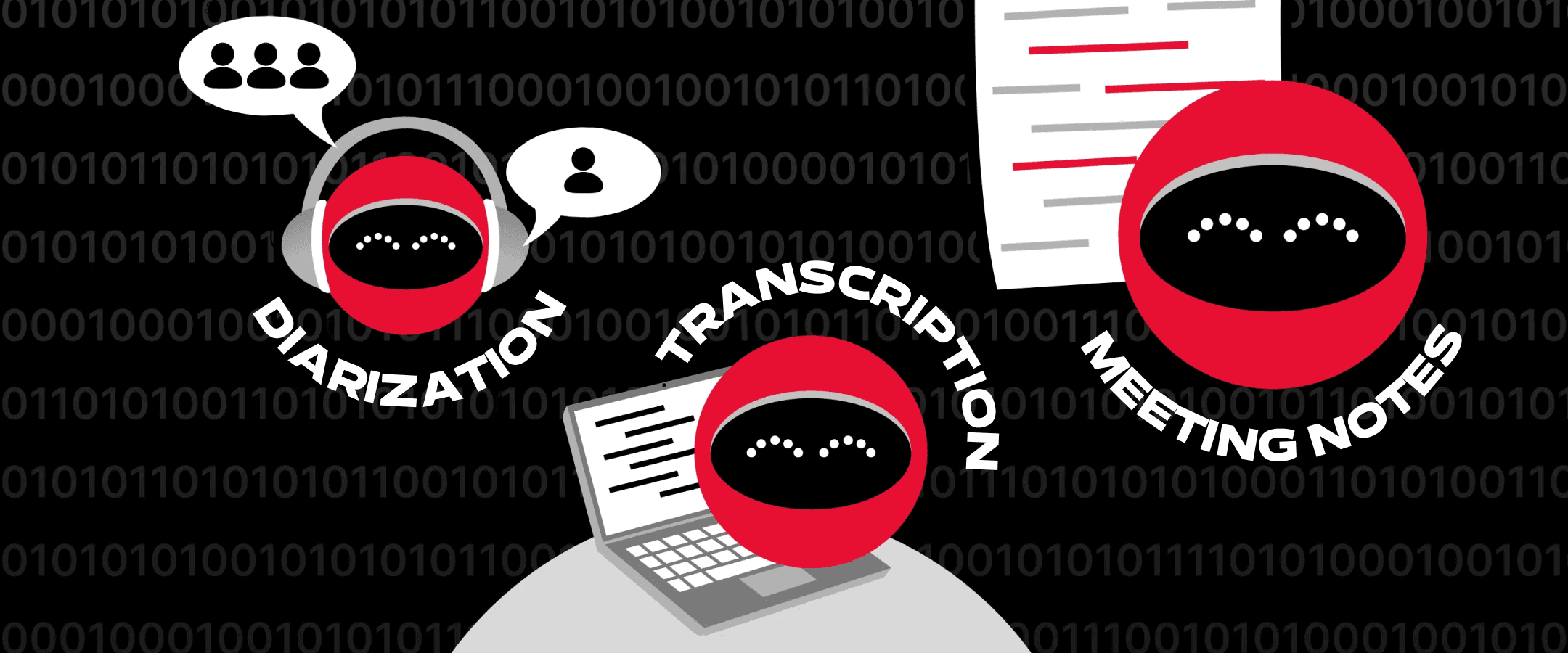

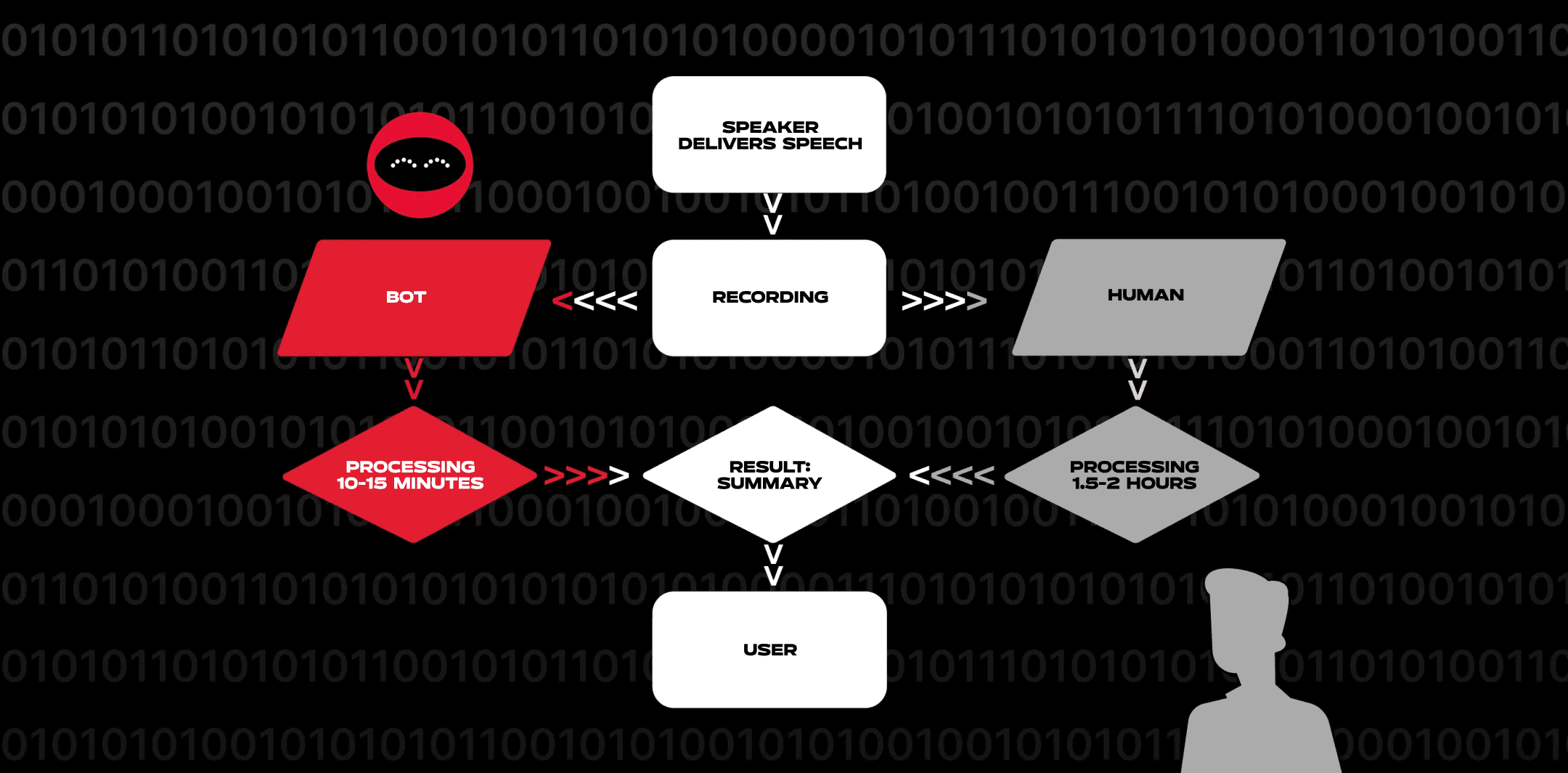

The Doubletapp team not only acted as the design partner of the event but also ensured that no one missed anything: during Agency Growth Day, we used our own transcription bot to prepare brief summaries (meeting notes) of all the presentations and panel discussions. Content was processed and published in real time, with meeting notes posted 10–15 minutes after each presentation. Our texts were not only published on the event's social media but also included in the final newsletter for participants, along with photos and presentation slides.

Task

The ML department at Doubletapp developed a transcription bot to address our work-related tasks: Doubletapp employees and clients are scattered around the world, and we need a tool to transcribe conference calls, convert long discussions into short summaries, promptly record results, and confirm agreements.

While preparing for Agency Growth Day, we realized that we could show participants the benefits of our product by helping them not to get lost in the flow of information and to get the most out of the conference. Doubletapp CEO Sergey Anchutin proposed to the organizers that up-to-date meeting notes of the presentations be published in the conference's Telegram channels. This way, attendees could engage more substantively with the speakers, relying on the summaries of their presentations, and later refresh their memory of the important points. Those who couldn't attend or didn't follow the live stream continuously would receive brief summaries and could selectively study what interested them.

We were tasked with preparing and releasing brief summaries of the six presentations scheduled for the first part of the event; the subsequent discussions, with a large number of participants (not always with microphones), were to be processed only as far as possible. The bot processed everything quickly and smoothly, however, so we continued and prepared six more summaries, covering the panelists' presentations and the experts' discussions.

Solution

Doubletapp's ML department excels at understanding the client's task and solving it precisely and economically. For example, we have built products based on machine learning and computer vision algorithms:

• Watchmen (a device and administrative panel for car-sharing services — driver identity verification and behavior control)

• Bus Factor (a device and administrative panel for use in public transport — automation of driver behavior control)

Another project is an iOS application for practical shooting training called Hit Factor Shots Analysis. Here, the ML team trained a neural network to accurately recognize gunshot sounds and ported the trained network to the end device.

In April 2023, the ML department began working on an internal product, a transcription bot. Ready-made solutions were available, but we were not satisfied with their performance on Russian speech. We also implemented speaker diarization, so the transcript shows which words belong to which speaker, a feature not commonly found elsewhere. We needed our own stable tool whose performance we could control and whose toolkit we could improve as needed.

Result

In total, during the 7-hour event, we processed 12 presentations ranging from 8 to 49 minutes in duration. The average processing time was about 15 minutes, with the shortest file processed in 8 minutes and the longest in 28 minutes. File sizes ranged from 14.7 MB to 401 MB. There were no failures.

The organizers included the summaries prepared by the bot in their mailing to participants, along with photos and the speakers' slides.

After our CEO's presentation and the announcement in the conference channels, 47 people used the bot, and we received several partnership proposals. For instance, a company that develops applications and websites for commercial medicine in Ekaterinburg reached out to us, and we are currently customizing the bot for its needs (we have added file formats that are convenient for them to upload). The company needed this kind of electronic secretary for meeting minutes: previously they made voice recordings and hired a transcriptionist, and now they can use their staff more efficiently. An additional advantage is that the files a client uploads are accessible only to that client, which allows confidential information to be processed.

We are ready to enhance the product together with interested clients to solve their business tasks. Currently, we are developing search tools for uploaded files and solutions to connect the bot directly to Zoom or Google Meet calls, enabling immediate results after the call ends.

Our super team

• Anton (Android)

• Kirill (ML)

• Igor (Backend)

• Kirill (Backend)

Process

Work on the product began in April 2023. We studied the existing tools on the market; they performed poorly with Russian-language content, so we started developing our own solution for internal processes. When preparations for the conference began, work entered the final stretch. The bot was designed with a large safety margin: requests are processed asynchronously (with room for multiple simultaneous requests), and the technologies were chosen to handle several large files at once, each up to 2 GB (Telegram's limit).
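As a rough illustration of asynchronous request handling, here is a minimal Python sketch using only the standard library. It is not the bot's actual code: `process_recording`, `handle_requests`, and the `MAX_PARALLEL_JOBS` limit are hypothetical names and values chosen for the example, and the real processing is replaced by a short sleep.

```python
import asyncio

MAX_PARALLEL_JOBS = 3  # hypothetical limit, not a figure from the article


async def process_recording(name: str, seconds: float) -> str:
    """Stand-in for the real diarize/transcribe/summarize work."""
    await asyncio.sleep(seconds)  # simulates slow, I/O-bound processing
    return f"summary of {name}"


async def handle_requests(requests: dict[str, float]) -> dict[str, str]:
    """Run all requests concurrently, at most MAX_PARALLEL_JOBS at a time."""
    semaphore = asyncio.Semaphore(MAX_PARALLEL_JOBS)

    async def worker(name: str, seconds: float) -> tuple[str, str]:
        async with semaphore:  # wait here if too many jobs are already running
            return name, await process_recording(name, seconds)

    pairs = await asyncio.gather(*(worker(n, s) for n, s in requests.items()))
    return dict(pairs)


def run_demo() -> dict[str, str]:
    """Submit three fake uploads at once and collect their summaries."""
    uploads = {"talk-1.mp3": 0.02, "talk-2.mp3": 0.01, "panel.ogg": 0.03}
    return asyncio.run(handle_requests(uploads))
```

The semaphore is one simple way to keep a burst of uploads from exhausting the server while still letting independent requests overlap.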

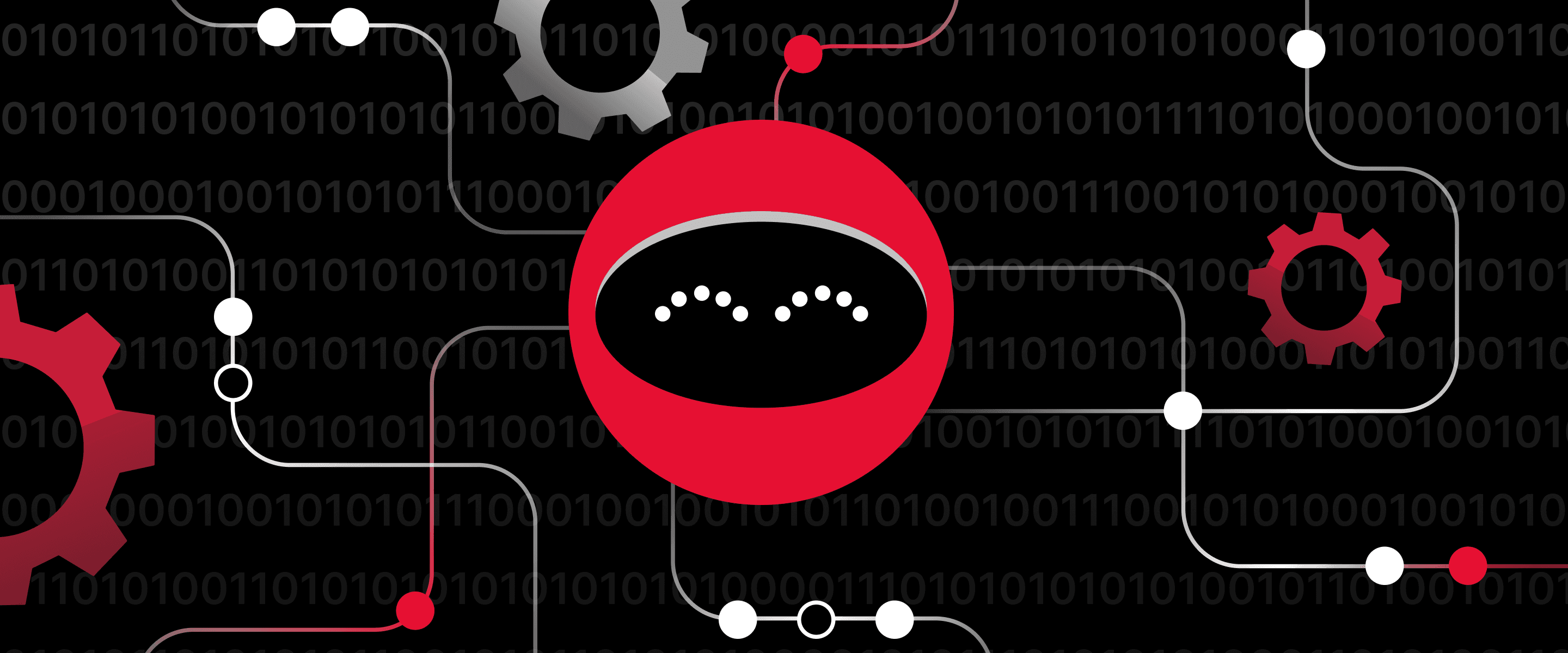

The transcription bot operates based on three neural networks:

1) Diarization (determining how many people are speaking and detecting each person's utterances). At this stage, we use a neural network deployed on our server.

2) Transcription (converting speech into text). At this stage, we use Whisper (OpenAI's open-source speech recognition system). In our view, nothing currently does better at this task in general, and it can realistically be fine-tuned for specific cases. Whisper can be self-hosted or used through paid APIs from services that already host it.

3) Meeting notes / summaries. We use GPT-4, where prompt engineering plays a significant role: we choose the prompts that get the existing API to solve the task most effectively.
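The way the three stages fit together can be sketched as follows. This is an assumption-laden illustration, not the bot's implementation: it takes diarization output as speaker turns with timestamps, takes transcription output as timed text segments, attributes each segment to the speaker whose turn overlaps it most (a common heuristic, swapped in here for whatever the bot actually does), and builds a prompt for the summarization step. All names (`SpeakerTurn`, `TextSegment`, `label_segments`, `build_summary_prompt`) and the prompt wording are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SpeakerTurn:
    """One diarization result: who spoke, and when (in seconds)."""
    speaker: str
    start: float
    end: float


@dataclass
class TextSegment:
    """One transcription result: recognized text with its time span."""
    text: str
    start: float
    end: float


def overlap(a_start: float, a_end: float, b_start: float, b_end: float) -> float:
    """Length in seconds of the intersection of two time intervals."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def label_segments(turns: list[SpeakerTurn],
                   segments: list[TextSegment]) -> list[tuple[str, str]]:
    """Attribute each text segment to the speaker turn it overlaps most."""
    labeled = []
    for seg in segments:
        best = max(
            turns,
            key=lambda t: overlap(t.start, t.end, seg.start, seg.end),
            default=None,
        )
        # Fall back to a generic label when no diarization is available.
        labeled.append((best.speaker if best else "Speaker", seg.text))
    return labeled


def build_summary_prompt(labeled: list[tuple[str, str]]) -> str:
    """Assemble the text that would be sent to the summarization model."""
    transcript = "\n".join(f"{speaker}: {text}" for speaker, text in labeled)
    return ("Summarize the key points of this talk as short meeting notes.\n\n"
            + transcript)
```

Keeping the stages as separate data structures makes it easy to replace any one model without touching the other two.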

To maximize speed, we implemented parallel processing of large files and skip steps where possible: for example, diarization is unnecessary when there is a single speaker, and some audio files do not need to be converted.
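Both optimizations can be sketched in a few lines of Python. The example below is only an illustration under stated assumptions: the list of formats that need no conversion (`NATIVE_FORMATS`), the 10-minute chunk length, the worker count, and the idea that the number of speakers is known up front (for instance, supplied by the user) are all hypothetical, and `transcribe_chunk` is a stub standing in for a real speech-to-text call.

```python
from concurrent.futures import ThreadPoolExecutor

# Formats assumed to be accepted without conversion (hypothetical list).
NATIVE_FORMATS = {"wav", "mp3"}


def plan_steps(extension: str, speaker_count: int) -> list[str]:
    """Return only the pipeline steps this file actually needs."""
    steps = []
    if extension.lower() not in NATIVE_FORMATS:
        steps.append("convert")
    if speaker_count > 1:  # a single speaker needs no diarization
        steps.append("diarize")
    steps += ["transcribe", "summarize"]
    return steps


def chunk_bounds(duration: float, chunk_len: float) -> list[tuple[float, float]]:
    """Split [0, duration] seconds into consecutive chunks of chunk_len."""
    if chunk_len <= 0:
        raise ValueError("chunk_len must be positive")
    bounds = []
    start = 0.0
    while start < duration:
        bounds.append((start, min(start + chunk_len, duration)))
        start += chunk_len
    return bounds


def transcribe_chunk(bounds: tuple[float, float]) -> str:
    """Stub: a real version would run speech recognition on this span."""
    start, end = bounds
    return f"[text {start:.0f}-{end:.0f}s]"


def transcribe_in_parallel(duration: float, chunk_len: float = 600.0,
                           workers: int = 4) -> str:
    """Transcribe chunks concurrently and join them in original order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map yields results in input order, so the text stays in sequence.
        parts = list(pool.map(transcribe_chunk, chunk_bounds(duration, chunk_len)))
    return " ".join(parts)
```

A production version would likely cut chunks at pauses rather than at fixed times, so that words are not split across chunk boundaries.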

To enable monetization, we had to build our own solution. Pyrogram (the library for creating Telegram bots) has no ready-made methods for payments, so we implemented them ourselves on top of what the library provides.

Sergey Anchutin, CEO of Doubletapp:

"The current solution is a quick MVP that we have been using within the company for six months and quickly adapted to the conference format to launch a wave of discussions and networking at the event.

In general, if I were to give advice: for quick decisions and hypothesis testing, use solutions that are as ready-made as possible, spending less time on the details and more on the essence of the product. Then, when it comes to improving quality, do the fine-tuning and bring in custom neural networks.

And try our bot for free at".